## Big Data Landscape 2016: A Comprehensive Overview & Future Insights

The year 2016 marked a pivotal point in the evolution of big data. Organizations were grappling with unprecedented volumes of data, and the technologies and strategies for managing that data and extracting value from it were evolving rapidly. This article surveys the **big data landscape of 2016**: the key trends, technologies, challenges, and opportunities that defined the period, and the products and services that shaped it. Understanding that landscape helps explain the foundations on which modern data science and analytics are built, and many of its lessons remain relevant today.

### Understanding the Big Data Landscape in 2016

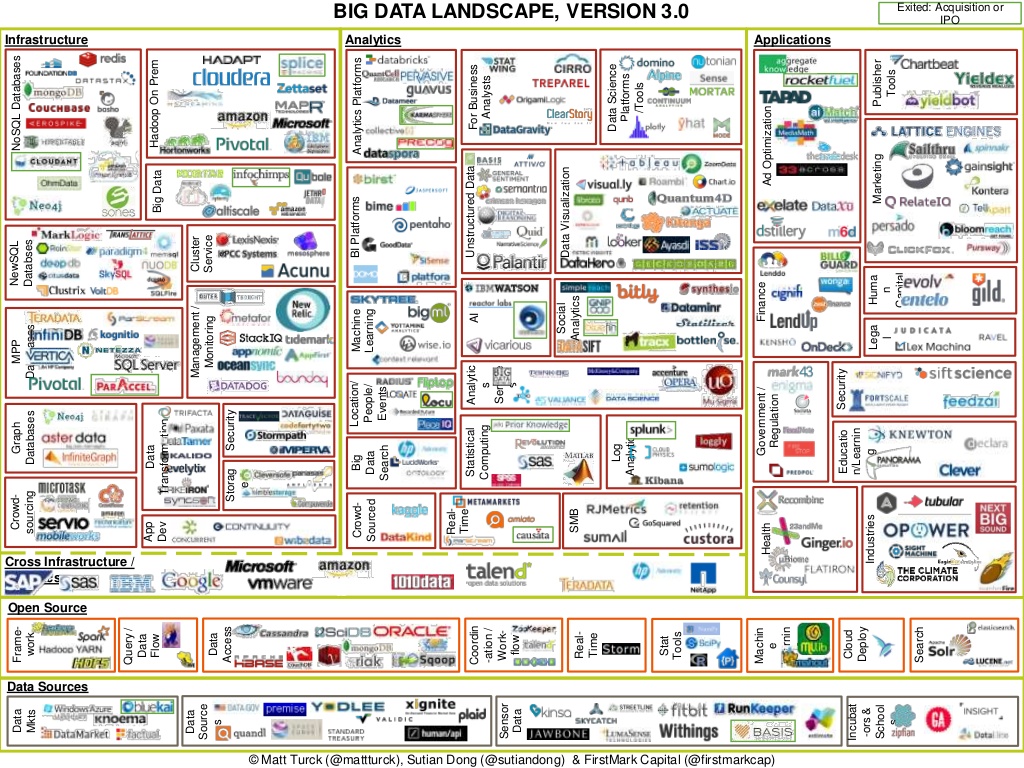

In 2016, the term “big data” was no longer just a buzzword; it had become a reality for many organizations. The sheer volume, velocity, and variety of data being generated were forcing companies to rethink their data management and analysis strategies. The landscape was characterized by a diverse range of technologies, from established relational databases to emerging NoSQL solutions and distributed computing frameworks.

* **Volume:** The amount of data being generated continued to explode, driven by the rise of mobile devices, social media, and the Internet of Things (IoT). Companies were struggling to store and process this massive influx of information.

* **Velocity:** The speed at which data was generated, and at which it had to be processed, was also rising. Real-time analytics and stream processing were becoming important for applications such as fraud detection and personalized marketing.

* **Variety:** Data was coming in a wide range of formats, from structured data in databases to unstructured data in text documents, images, and videos. Organizations needed to be able to handle this diversity of data to extract meaningful insights.

* **Veracity:** The accuracy and reliability of data were also a growing concern. Data quality issues could lead to inaccurate insights and poor decision-making.

* **Value:** Ultimately, the goal of big data initiatives was to extract value from the data. This required not only the right technologies but also the right skills and expertise.
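The veracity concern above is commonly handled with rule-based validation before data reaches analysts. The following is a minimal Python sketch under stated assumptions: the record fields (`txn_id`, `user_id`, `amount`), the rules themselves, and the names `check_record`, `validate`, and `QualityReport` are all hypothetical and are not taken from any particular data quality product.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Iterable, Optional

# Hypothetical rules for an illustrative transaction feed (not from any real tool).
REQUIRED_FIELDS = ("txn_id", "user_id", "amount")


@dataclass
class QualityReport:
    valid: list = field(default_factory=list)     # records that passed every rule
    rejected: list = field(default_factory=list)  # (record, reason) pairs


def check_record(record: Dict[str, Any]) -> Optional[str]:
    """Return the first rule the record violates, or None if it passes."""
    for name in REQUIRED_FIELDS:
        if record.get(name) is None:
            return f"missing {name}"
    amount = record["amount"]
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        return "amount is not numeric"
    if amount < 0:
        return "negative amount"
    return None


def validate(records: Iterable[Dict[str, Any]]) -> QualityReport:
    """Split records into valid and rejected, also flagging repeated txn_ids."""
    report = QualityReport()
    seen_ids = set()
    for record in records:
        reason = check_record(record)
        if reason is None and record["txn_id"] in seen_ids:
            reason = "duplicate txn_id"
        if reason is None:
            seen_ids.add(record["txn_id"])
            report.valid.append(record)
        else:
            report.rejected.append((record, reason))
    return report
```

Rejected records are kept together with a reason rather than silently dropped, so that quality problems can be measured and traced back to their source.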

### Core Concepts and Advanced Principles in 2016

Several core concepts and principles underpinned the **big data landscape of 2016**. These included:

* **Distributed Computing:** Frameworks like Hadoop and Spark enabled organizations to process massive datasets across clusters of commodity servers. This was essential for handling the volume and velocity of big data.

* **NoSQL Databases:** NoSQL databases provided a flexible and scalable alternative to traditional relational databases. They were particularly well-suited for handling unstructured and semi-structured data.

* **Data Warehousing:** Data warehouses remained an important tool for storing and analyzing historical data. However, they were increasingly being complemented by data lakes, which allowed organizations to store all of their data, regardless of format, in a single repository.

* **Data Mining and Machine Learning:** Advanced analytics techniques, such as data mining and machine learning, were used to extract insights from big data. These techniques could be used to identify patterns, predict future trends, and personalize customer experiences.

* **Cloud Computing:** Cloud platforms provided a scalable and cost-effective infrastructure for big data processing and storage. Many organizations were migrating their big data workloads to the cloud.
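The distributed computing idea behind frameworks like Hadoop and Spark can be illustrated on a single machine with a scatter/gather pattern. In this minimal sketch, threads stand in for cluster nodes (an assumption made purely for illustration; this is not how Hadoop or Spark jobs are written), and `split`, `partial_stats`, and `distributed_mean` are hypothetical names. Each worker computes a partial result on its own chunk, and a final step merges the partial results.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, n_parts):
    """Cut `data` into at most `n_parts` contiguous chunks, like blocks spread over nodes."""
    size = -(-len(data) // n_parts)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def partial_stats(chunk):
    """Local work on one 'node': return (sum, count), which can be merged safely."""
    return sum(chunk), len(chunk)


def distributed_mean(data, n_workers=4):
    """Scatter chunks to workers, gather their partial results, and merge them."""
    if not data:
        raise ValueError("cannot take the mean of an empty dataset")
    chunks = split(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_stats, chunks))
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count
```

Each worker returns a `(sum, count)` pair rather than a local mean, because averaging per-chunk means gives a wrong answer whenever chunks differ in size: for the numbers 1 to 10 split into chunks of 4, 4, and 2 values, averaging the chunk means gives about 6.17 instead of the correct 5.5. Distributed frameworks depend on the same property, merging only partial results that combine correctly.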

### Importance and Current Relevance of Understanding the 2016 Landscape

Understanding the **big data landscape of 2016** matters for several reasons. First, it provides historical context for the evolution of big data technologies and strategies. Second, it highlights the challenges and opportunities organizations faced during that transformative period. Third, it offers lessons for organizations still grappling with big data problems today: although the technology has advanced considerably, the fundamental principles of managing data and extracting value from it have not changed, and many current tools build on ideas that were already mainstream in 2016. Studying the period can inform current strategies and help organizations avoid common pitfalls.

### Hadoop: A Dominant Force in the 2016 Big Data Landscape

Hadoop, an open-source distributed processing framework, was a dominant force in the **big data landscape of 2016**. It was designed to handle massive datasets by distributing storage and processing across a cluster of commodity servers. Hadoop’s core components included:

* **Hadoop Distributed File System (HDFS):** A distributed file system that provided scalable and reliable storage for big data.

* **MapReduce:** A programming model that allowed developers to write applications that could process data in parallel across the Hadoop cluster.

* **YARN (Yet Another Resource Negotiator):** A resource management system that allowed multiple applications to run on the Hadoop cluster.
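The MapReduce programming model can be sketched in plain Python with the classic word-count example. This is a single-process illustration only: in a real Hadoop job the map and reduce logic would typically live in Java `Mapper` and `Reducer` classes running on many nodes, and the framework would perform the shuffle over the network. The function names below are hypothetical.

```python
from collections import defaultdict
from itertools import chain
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle and sort: group every value emitted for the same key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reducer: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence on one machine."""
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
```

Because each mapper sees only its own input and each reducer sees only one key's values, both phases can run in parallel across a cluster; the shuffle is the step that moves data between nodes.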

Hadoop enabled organizations to process data that was too large to be handled by traditional systems. It was widely used for batch processing, data warehousing, and other big data applications. While Hadoop remains relevant, cloud-based solutions are rapidly gaining popularity, often integrating aspects of Hadoop’s original design principles.

### Key Features of Hadoop in the 2016 Era

* **Scalability:** Hadoop could scale to handle petabytes of data by adding more nodes to the cluster.

* **Fault Tolerance:** Hadoop was designed to keep operating when individual nodes failed. HDFS replicated each data block across several nodes (three copies by default), and failed tasks were re-run on other nodes, so hardware failures caused minimal disruption.

* **Cost-Effectiveness:** Hadoop ran on commodity hardware, making it a cost-effective solution for big data processing.

* **Open Source:** Hadoop was open source, meaning that it was free to use and modify. This fostered a large and active community of developers.

* **Batch Processing:** Hadoop was primarily designed for batch processing, meaning that it processed data in large chunks. Real-time processing capabilities were less mature in 2016.

* **Data Locality:** Hadoop moved the computation to the data, rather than moving the data to the computation. This reduced network traffic and improved performance.

Each of these features played a critical role in making Hadoop a cornerstone of big data infrastructure during this period. Its ability to handle massive datasets with fault tolerance and cost-effectiveness made it an attractive option for organizations facing the challenges of big data.
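HDFS storage, fault tolerance, and data locality can be illustrated with a little arithmetic and a toy scheduler. The sketch assumes Hadoop 2.x defaults (a 128 MB `dfs.blocksize` and a `dfs.replication` of 3). The round-robin replica placement and the `schedule` function are simplified, hypothetical stand-ins for HDFS's rack-aware placement policy and Hadoop's locality-aware task scheduling, not their actual implementations.

```python
import math

BLOCK_SIZE_MB = 128  # Hadoop 2.x default for dfs.blocksize
REPLICATION = 3      # default for dfs.replication


def plan_storage(file_size_mb, block_size_mb=BLOCK_SIZE_MB, replication=REPLICATION):
    """Return (number of blocks, raw cluster storage in MB) for one file."""
    n_blocks = math.ceil(file_size_mb / block_size_mb)
    # A final partial block occupies only its actual size, so raw usage is size x replicas.
    return n_blocks, file_size_mb * replication


def place_replicas(n_blocks, nodes, replication=REPLICATION):
    """Assign each block to `replication` distinct nodes, round-robin.

    Simplified on purpose: real HDFS uses a rack-aware placement policy.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        b: [nodes[(b + i) % len(nodes)] for i in range(replication)]
        for b in range(n_blocks)
    }


def schedule(block, placement, free_nodes, failed=frozenset()):
    """Pick a node for the task that processes `block`, preferring data locality."""
    live = [n for n in placement[block] if n not in failed]
    if not live:
        raise RuntimeError(f"all replicas of block {block} are lost")
    candidates = sorted(set(free_nodes) - set(failed))
    if not candidates:
        raise RuntimeError("no healthy free node available")
    for node in live:
        if node in candidates:
            return node, "node-local"  # move the computation to the data
    return candidates[0], "remote"     # fall back: read the block over the network
```

For example, a 1 TB file (1,048,576 MB) splits into 8,192 blocks and occupies 3 TB of raw disk with three replicas, so capacity planning has to account for the replication factor. Because every block lives on three nodes, a task can still run node-local even after two of those nodes fail.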

### Advantages, Benefits & Real-World Value of Hadoop in 2016

Hadoop offered significant advantages in the **big data landscape of 2016**:

* **Handling Massive Datasets:** Hadoop enabled organizations to process datasets that were too large for traditional single-server systems by spreading both storage and computation across a cluster.

* **Cost Reduction:** Because Hadoop ran on commodity hardware, it could substantially reduce storage and processing costs compared with traditional data warehousing solutions.

* **Improved Scalability:** Organizations could increase capacity by adding nodes to a running cluster, usually without downtime.

* **Faster Time to Insight:** By making large-scale analysis practical, Hadoop could shorten the path from raw data to insight, supporting faster decisions and quicker responses to market changes.

* **New Business Opportunities:** Hadoop made it practical to analyze data that organizations previously could not process, which helped some of them identify new revenue streams and improve customer engagement.

These benefits translated into real-world value for organizations across various industries. For example, retailers used Hadoop to analyze customer purchase data and personalize marketing campaigns, while financial institutions used it to detect fraud and manage risk.

### Comprehensive Review of Hadoop as a Big Data Solution in 2016

Hadoop was a powerful tool in the **big data landscape of 2016**, but it had both strengths and weaknesses. Here’s a balanced review:

* **User Experience & Usability:** Setting up, configuring, and managing Hadoop clusters was complex, required specialized skills, and involved a steep learning curve.

* **Performance & Effectiveness:** Hadoop excelled at batch processing but was less efficient for real-time analytics. While it delivered on its promise of handling massive datasets, query performance could be slow for certain workloads.
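The batch versus real-time distinction can be made concrete with a tumbling-window count. The sketch below contrasts a batch function, which needs the complete dataset before it reports anything, with a streaming counter that updates its state per event and emits each window as soon as it closes. It assumes events arrive in timestamp order and ignores late data; the `(timestamp, kind)` event shape and all names are hypothetical.

```python
from collections import Counter, defaultdict


def window_start(ts, window_s):
    """Align a timestamp (in seconds) to the start of its tumbling window."""
    return ts // window_s * window_s


def batch_counts(events, window_s):
    """Batch style: process the complete dataset, then report every window."""
    counts = defaultdict(Counter)
    for ts, kind in events:
        counts[window_start(ts, window_s)][kind] += 1
    return dict(counts)


class TumblingWindowCounter:
    """Streaming style: keep per-window state and emit each window when it closes.

    Assumes events arrive in timestamp order; late events are not handled.
    """

    def __init__(self, window_s):
        self.window_s = window_s
        self.start = None  # start of the window currently being filled
        self.counts = Counter()

    def add(self, ts, kind):
        """Consume one event; return (start, counts) if a window just closed, else None."""
        start = window_start(ts, self.window_s)
        closed = None
        if self.start is not None and start != self.start:
            closed = (self.start, self.counts)
            self.counts = Counter()
        self.start = start
        self.counts[kind] += 1
        return closed

    def flush(self):
        """Emit the final open window at end of stream (None if nothing was seen)."""
        if self.start is None:
            return None
        closed = (self.start, self.counts)
        self.start, self.counts = None, Counter()
        return closed
```

Both approaches produce the same counts; the difference is latency. A batch job reports a window only after the whole input has been processed, whereas the streaming counter reports it as soon as the first event of the next window arrives.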

**Pros:**

1. **Scalability:** Proven ability to scale to petabytes of data.

2. **Fault Tolerance:** Reliable operation even in the face of hardware failures.

3. **Cost-Effectiveness:** Lower cost compared to traditional data warehousing solutions.

4. **Open Source:** Access to a large and active community.

5. **Data Locality:** Efficient data processing through minimized data movement.

**Cons/Limitations:**

1. **Complexity:** Steep learning curve for setup and configuration.

2. **Real-Time Processing:** Less efficient for real-time analytics.

3. **Security:** Security configurations required careful attention.

4. **Resource Management:** Efficient resource management required expertise.

**Ideal User Profile:** Hadoop was best suited for organizations with large datasets, batch processing needs, and the technical expertise to manage complex systems.

**Key Alternatives (Briefly):** Alternatives included relational databases with specialized hardware and early NoSQL solutions. However, these often lacked the scalability or cost-effectiveness of Hadoop.

**Expert Overall Verdict & Recommendation:** Despite its complexity, Hadoop was a game-changer in the **big data landscape of 2016**, enabling organizations to process datasets that had previously been impractical to handle. It was a strong choice for organizations with the necessary expertise and a clear understanding of its limitations.

### Insightful Q&A Section on Big Data Landscape 2016

Here are 10 questions and answers about the **big data landscape of 2016**:

1. **Q: What were the biggest challenges organizations faced when adopting big data technologies in 2016?**

**A:** The biggest challenges included a shortage of skilled data scientists, the complexity of integrating different big data technologies, and concerns about data security and privacy.

2. **Q: How did the rise of cloud computing impact the big data landscape in 2016?**

**A:** Cloud computing provided a scalable and cost-effective infrastructure for big data processing and storage, making it easier for organizations to adopt big data technologies.

3. **Q: What role did open-source software play in the big data landscape in 2016?**

**A:** Open-source software, such as Hadoop and Spark, played a critical role in democratizing big data technologies and fostering innovation.

4. **Q: How did the Internet of Things (IoT) contribute to the growth of big data in 2016?**

**A:** The IoT generated massive amounts of data from connected devices, further fueling the growth of big data and creating new opportunities for data analysis.

5. **Q: What were the key trends in big data analytics in 2016?**

**A:** Key trends included the rise of real-time analytics, the increasing use of machine learning, and the growing importance of data visualization.

6. **Q: How did organizations address the challenge of data quality in 2016?**

**A:** Organizations invested in data quality tools and processes to ensure that their data was accurate, consistent, and reliable.

7. **Q: What were the ethical considerations surrounding big data in 2016?**

**A:** Ethical considerations included concerns about data privacy, algorithmic bias, and the potential for discrimination.

8. **Q: How did the big data landscape in 2016 differ from the landscape in previous years?**

**A:** The big data landscape in 2016 was characterized by greater maturity, a wider range of technologies, and a greater focus on extracting value from data.

9. **Q: What skills were most in demand in the big data job market in 2016?**

**A:** Skills in demand included data science, data engineering, and data analytics.

10. **Q: How did the big data landscape in 2016 set the stage for future developments in data science and analytics?**

    **A:** The big data landscape in 2016 laid the foundation for more advanced data science and analytics techniques, as well as for the broader adoption of artificial intelligence.

### Conclusion

The **big data landscape of 2016** was a period of significant transformation, characterized by rapid growth, technological innovation, and new business opportunities. Understanding it provides valuable insight into the evolution of big data and the challenges and opportunities organizations faced at the time. The technologies and strategies developed in 2016 continue to influence data science and analytics today, and reflecting on that period can help organizations prepare for the future of big data and use it to drive innovation and create value.